Delving into the world of Continuous Integration and Continuous Deployment (CI/CD) offers both challenges and revelations. As a CI/CD engineer at Puzzle ITC, I’ve grappled with intricate pipelines, elusive debugging scenarios, and the complexities of monorepos. This post, the first in a three-part series, unfolds my journey, highlighting common hurdles and the pressing need for more refined tools in our arsenal. Join me as we explore the nuances of CI/CD and its evolving landscape.

The Legacy

I work as a CI/CD engineer at Puzzle ITC. My daily responsibilities involve supporting our development and operations teams by managing their pipelines. These pipelines range from straightforward Docker image builds to intricate processes that include build tasks, rigorous testing, security scanning, integrated end-to-end testing, and other associated activities. While some might label my role as »DevOps«, I see it more as a cultural shift than just a job title. I don’t strictly identify as a developer or a platform operator; instead, I view myself as the bridge that links both teams. Occasionally, I even describe my role as that of a mediator for both groups, but that’s a topic for another discussion.

Over the past year, I’ve been deeply involved in a significant project. This project has a Ruby on Rails backend that integrates various OpenGIS components and Python elements. The same repository also hosts a frontend developed in TypeScript. The team uses GitLab CI to handle their CI/CD pipelines.

Due to my extensive involvement in this project and my experience as a consultant, I often face challenges that recur regardless of the CI tool in use. Having worked extensively with Jenkins, GitLab CI, GitHub Actions, and Tekton, I’ve noticed that most issues are consistent across these platforms.

Fail Fast / Fast Feedback

»Fail Fast« or »Fast Feedback« is a term often used to describe the speed at which software developers receive feedback from the pipeline. For instance, if you commit a file with an error, waiting for an hour to get notified of the issue isn’t ideal. However, as a CI/CD engineer responsible for pipeline development, I interpret »Fast Feedback« as the speed at which I get feedback regarding changes I’ve made to the pipeline itself.

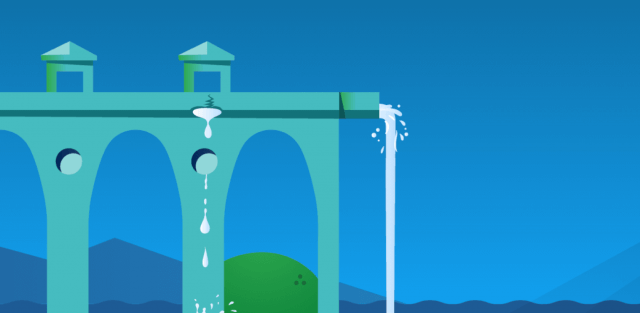

To illustrate this point, let’s look at the GitLab pipeline shown in the figure above. This pipeline consists of multiple stages, each containing a set of jobs. Certain jobs rely on the successful completion of others from preceding stages. By default, GitLab runs all jobs within a stage in parallel and processes the stages in sequence.

Suppose we encounter an unexpected error in one of the later stages, such as the »test:erd« job in the test stage. After identifying and correcting the issue in the .gitlab-ci.yml file, we commit and push the changes. Yet we end up waiting for all preceding dependencies to finish before the »test:erd« job even begins. This wait delays the confirmation of our fix, which is the opposite of fast feedback.

After making changes to the .gitlab-ci.yml for the »test:erd« job, it takes on average 7m 25s before we receive feedback from the pipeline.

    test:erd:
      image: "$RAILS_TEST_IMAGE"
      stage: lint
      needs:
        - job: build:rails_test_image
      allow_failure: true
      script:
        - bundle exec rake db:setup erd
        - exit 123 #<<<----- Add new line with simulated error

This issue isn’t unique to GitLab; it’s also observed in other tools like Tekton and GitHub Actions. The core problem is that job execution isn’t cached, leaving you with two choices: either run the entire pipeline until you reach your specific point of interest or predetermine rules to specify which jobs should execute under certain conditions.
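To make the second option more concrete, here is a minimal sketch of what such predetermined rules could look like in GitLab CI. It is not the project's actual configuration: the SKIP_IMAGE_BUILD variable, the `build` stage name, and the build script are assumptions made for illustration. The idea is that the expensive image build can be switched off for a single pipeline, while `needs:optional` lets »test:erd« start anyway.

```yaml
# Sketch only: SKIP_IMAGE_BUILD is a hypothetical variable, and the script
# line of the build job is a placeholder.
build:rails_test_image:
  stage: build                      # assumes a `build` stage declared before `lint`
  script:
    - ./build-test-image.sh         # placeholder for the real build steps
  rules:
    - if: '$SKIP_IMAGE_BUILD == "true"'
      when: never                   # leave the build out of this pipeline
    - when: on_success              # default: build as usual

test:erd:
  image: "$RAILS_TEST_IMAGE"
  stage: lint
  needs:
    - job: build:rails_test_image
      optional: true                # start even if the build job was excluded
  allow_failure: true
  script:
    - bundle exec rake db:setup erd
```

Pushing with `git push -o ci.variable="SKIP_IMAGE_BUILD=true"` would set the variable for that one pipeline. The trade-off is that »test:erd« then runs against whatever image $RAILS_TEST_IMAGE already points to, and every such shortcut has to be designed and maintained by hand.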

Furthermore, many CI tools that manage pipelines are closely tied to their designated backend infrastructure. For instance, GitLab CI depends on a GitLab instance, whereas Tekton needs a fully-fledged Kubernetes cluster to operate. Consequently, your daily routine often revolves around a repetitive cycle: make changes, commit them, wait for the pipeline to run, validate the results, and then start the cycle anew.

Monitoring and Observability

Several months ago, one of our development teams brought to our attention a significant drop in pipeline performance over the preceding days. At that point, we only knew about the specific pipelines and jobs impacted by the performance degradation. Have you ever had to probe performance issues in GitLab jobs, particularly within Kubernetes environments where pods are initiated by a GitLab Runner? Pinpointing the singular job causing issues amid thousands of pods—and in turn affecting all other pipelines—is no small feat.

From my perspective, many CI tools fall short when it comes to providing robust solutions for diving deep into pipeline insights. Users often find themselves sifting through multiple UI pages or querying endpoints, only to then manually compile all the relevant data. Advanced observability solutions, such as OpenTelemetry, are glaringly absent. Although tools like gitlab-ci-pipelines-exporter offer some assistance in garnering more insights, seamlessly fusing job execution data with resource monitoring information remains a tough endeavor.

Debugging

Embarking on debugging a GitLab CI build can be quite an adventure. I’ve navigated this terrain extensively, especially during our GitLab Runner’s migration from Rancher to OpenShift. OpenShift’s tightened security model brought forth challenges: numerous jobs failed because of incorrect file permissions or the newfound inability to run as the root user. In some cases, fixing the builds entailed adding just a line or two to the Dockerfile or the GitLab CI configuration. However, there were times when a more in-depth analysis was necessary. It was during these moments that I found the need to live-debug certain jobs, instead of making blind alterations and repeatedly triggering the pipeline.

Still, the act of debugging GitLab CI jobs is far from simple. One crucial aspect to grasp is that all pipeline jobs operate within pods housed on our OpenShift platform. This setup makes the build context fleeting — as soon as a job finishes, its corresponding pod vanishes. Here’s how I tackle debugging in a GitLab pipeline:

The initial step involves ensuring that the pod remains active during the script block’s execution. A straightforward method to achieve this is by embedding a sleep 1200 command within the job’s script block. With this in place, we can initiate a remote shell connection to the container within the pod, delve into the build context, explore the file system, and run commands to study their effects firsthand.

    test:erd:
      image: "$RAILS_TEST_IMAGE"
      stage: lint
      needs:
        - job: build:rails_test_image
      allow_failure: true
      script:
        - bundle exec rake db:setup erd
        - sleep 1200 #<<<----- "Breakpoint" like 1990
        - exit 123 #<<<----- Simulated error

This method does give you a way to debug your pipeline. Yet compared with software development, where genuine debuggers have existed for decades, it feels decidedly outdated.

Templating/Reusability

Most CI tools, including GitLab and Tekton, offer functionalities to craft templates, enhancing reusability. However, drafting templates can often feel even more intricate than scripting with Bash. Templates typically lack a robust type system or exception handling. [To be fair, Bash doesn’t truly offer these either, though many templates possess rudimentary type systems with basic types.] Frequently, you might find yourself resorting to Bash commands to introduce flow control.

The recommended approach to formulating GitLab templates involves creating an additional YAML file and including it in your pipeline. When writing a job template, it’s customary to configure it using environment variables. One salient challenge with templates is working out how to configure them correctly. You’re left with two choices: painstakingly create manual documentation or sift through the template code. Regrettably, unlike the majority of programming languages, there’s no mechanism akin to Javadoc to auto-generate documentation for your templates.
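As an illustration of the pattern described above, the following sketch shows a job template in its own file, configured through an environment variable, and a pipeline that includes it. The file path, the hidden job name `.rake_task`, and the variable RAKE_TASK are hypothetical names chosen for this example; only `include:local`, `extends`, and `variables` are GitLab CI keywords.

```yaml
# File: ci/templates/rake-task.yml (hypothetical template file)
.rake_task:
  image: "$RAILS_TEST_IMAGE"
  variables:
    RAKE_TASK: ""                   # the template's only "parameter"
  script:
    - bundle exec rake $RAKE_TASK

# File: .gitlab-ci.yml
include:
  - local: '/ci/templates/rake-task.yml'

test:erd:
  extends: .rake_task
  stage: lint
  variables:
    RAKE_TASK: "db:setup erd"       # overrides the template default
```

Nothing in this setup tells a user that RAKE_TASK exists, what values it accepts, or what happens when it is left empty. The only documentation is the comment in the template file, which is exactly the gap described above.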

Vendor Lock-In

Throughout my journey, I’ve encountered several projects anchored to legacy CI tools like Jenkins. Yet the dated architecture and security vulnerabilities of such tools often necessitate transitions to more contemporary platforms, such as GitLab CI or GitHub Actions. This migration frequently means rebuilding the entire pipeline in an altogether different language or syntax, even though much of the pipeline’s core logic and execution might already be encapsulated within container images. So, while the underlying processes of a pipeline might be containerized, moving it to a different CI system can still be a difficult undertaking.

Monorepos

Developers often have a liking for monorepos, while CI/CD engineers tend to view them with skepticism. In a Git monorepo, every project or component is tied together by a shared history. This makes it easier to track changes and dependencies across the codebase. It also simplifies dependency management between projects and encourages code sharing and reuse across different parts of the codebase.

Nevertheless, Git monorepos are not without their pitfalls. They can increase the complexity of the CI/CD pipeline and lead to bloated repository sizes. Consequently, it’s crucial to weigh the pros against the cons before adopting a Git monorepo setup for your development operations.

From where I stand, many CI tools fall short when it comes to adeptly managing and building artifacts stemming from monorepos. The core challenge lies in discerning which repository segments are interdependent, as well as pinpointing instances where only specific facets—like the frontend or backend—demand building, testing, or releasing.
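The closest thing GitLab CI offers today is path-based filtering with `rules:changes`. The sketch below assumes a hypothetical layout with `frontend/` and `backend/` directories and placeholder test commands; it is meant to show the shape of the workaround, not a recommended setup.

```yaml
# Sketch only: the frontend/ and backend/ directories and the script
# commands are hypothetical.
test:frontend:
  stage: test
  script:
    - cd frontend && npm ci && npm test
  rules:
    - changes:
        - frontend/**/*             # run only when frontend files changed

test:backend:
  stage: test
  image: "$RAILS_TEST_IMAGE"
  script:
    - bundle exec rake test
  rules:
    - changes:
        - backend/**/*              # run only when backend files changed
        - Gemfile.lock              # shared inputs must be listed by hand
```

These rules only match file paths. They know nothing about which components actually depend on each other, so every shared file or cross-component dependency has to be added and kept up to date manually.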

Given our current toolkit, we’re squandering substantial computational resources and precious time. Ideally, crafting pipelines should be an intuitive venture, but more often than not, it feels like a game of hit and miss.

What’s next?

In the upcoming second part of this series, we’ll delve into the innovative technology of Dagger. Introduced to me in May 2022, Dagger offers a tantalizing escape from the shackles of vendor lock-in, merging a trio of components: the Dagger SDK, Engine, and an OCI-compatible runtime. Join me as we explore its unique approach to CI, from the integration of its SDK to the concurrent operation of its cleverly crafted Directed Acyclic Graphs (DAGs). Stay tuned!