This blog post walks through the steps required to manually copy (migrate) data from an existing Kubernetes PersistentVolume (PV) into a new, empty PV. It’s meant as a step-by-step guide for novice Kubernetes users and administrators, but it can also serve as an example for learning what the reclaim policy on a PV actually does.

Motivation

Migrating from an old StorageClass or storage backend to a new one is one common motivation for such a copy task. Furthermore, these steps are also required if a PV is running low on free space, and the corresponding Kubernetes CSI driver does not support VolumeExpansion.

From a bird’s eye view, here’s what we need to do: Scale the application down to 0 replicas, replicate the old PV data to the new PV, create a new PVC to “inject” the new PV into the application, scale the application back to the original replica count, and finally delete the old PV if it’s not needed anymore. While juggling around the PVCs, we also need to prevent Kubernetes from prematurely wiping any temporarily unreferenced PVs.

Before we get started for real, please keep in mind that this guide shows how to copy data from one PV to another in the most manual way possible. Depending on the actual setup, CSI driver, and storage backend, it may be easier to do in practice, since some CSI drivers or storage backends offer helpful data migration possibilities to facilitate such scenarios.

Step-by-Step Guide

First, dump the YAML manifests of the old PV and its bound PersistentVolumeClaim (PVC):

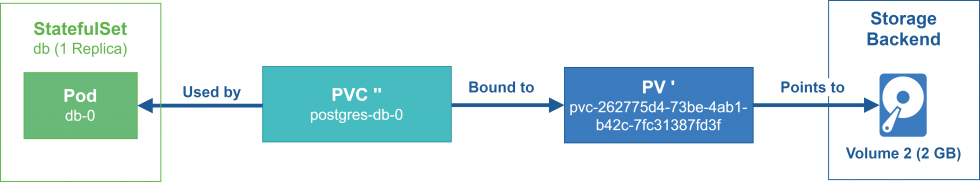

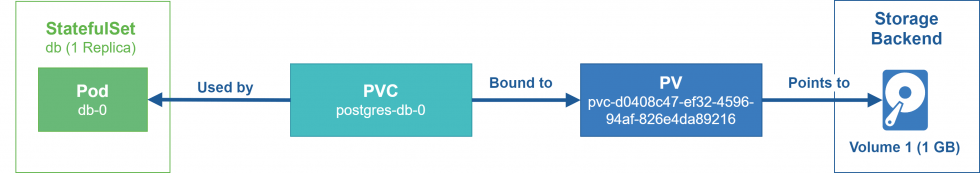

    user@host:/tmp$ kubectl get pvc
    NAME            STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    postgres-db-0   Bound    pvc-d0408c47-ef32-4596-94af-826e4da89216   1Gi        RWO            sc-default     2m10s
    user@host:/tmp$ kubectl get pv pvc-d0408c47-ef32-4596-94af-826e4da89216 -o yaml > pvc-d0408c47-ef32-4596-94af-826e4da89216.yaml
    user@host:/tmp$ kubectl get pvc postgres-db-0 -o yaml > postgres-db-0.yaml

Make sure no application workloads actively access the PVs by scaling their replica count to 0. For a ReadWriteOnce PV, this frees the volume so it can be mounted in a new datacopy Pod; for a ReadWriteMany PV, it avoids the risk of corrupting the old PV data through concurrent access during the copy:

    user@host:/tmp$ kubectl describe pvc postgres-db-0
    Name:          postgres-db-0
    Namespace:     my-namespace
    StorageClass:  sc-default
    Status:        Bound
    Volume:        pvc-d0408c47-ef32-4596-94af-826e4da89216
    ...
    Capacity:      1Gi
    Access Modes:  RWO
    VolumeMode:    Filesystem
    Used By:       db-0
    ...
    user@host:/tmp$ kubectl get statefulsets
    NAME   READY   AGE
    db     1/1     4m4s
    user@host:/tmp$ kubectl scale statefulset --replicas=0 db
    statefulset.apps/db scaled
    user@host:/tmp$ kubectl get statefulsets
    NAME   READY   AGE
    db     0/0     4m28s
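Since the replica count has to be restored at the end, it can help to record it before scaling down. The following is a minimal sketch, assuming the StatefulSet `db` and its Pod `db-0` from this example and a kubectl context that already points at the right namespace. The function name `scale_down_and_wait` and the file `replicas.txt` are made up for illustration.

```shell
# Hypothetical helper: remember the current replica count, scale the
# StatefulSet to 0, and block until the given Pod is gone.
scale_down_and_wait() {
  sts="$1"   # StatefulSet name, e.g. db
  pod="$2"   # Pod to wait for, e.g. db-0

  # Save the original replica count so it can be restored later.
  kubectl get statefulset "$sts" -o jsonpath='{.spec.replicas}' > replicas.txt

  kubectl scale statefulset "$sts" --replicas=0

  # Returns once the Pod object has been deleted (or the timeout expires).
  kubectl wait --for=delete "pod/$pod" --timeout=120s
}

# Usage: scale_down_and_wait db db-0
```

Waiting for the Pod to disappear matters for ReadWriteOnce volumes in particular, because the volume can only be mounted by the datacopy Pod after the old Pod has released it.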

Create a manifest for a new PVC on the new StorageClass, on the new storage backend, or with a larger size (depending on your use case), e.g. the following pvc_postgre-new.yaml:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: new-postgres-db-0
      namespace: my-namespace
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 2Gi
      storageClassName: sc-default

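Apply the manifest and wait a few seconds until its status shows Bound. (If the StorageClass uses volumeBindingMode: WaitForFirstConsumer, the PVC stays Pending until the first Pod that uses it is scheduled, which is expected.)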

    user@host:/tmp$ kubectl apply -f pvc_postgre-new.yaml
    user@host:/tmp$ kubectl get pvc
    NAME                STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    new-postgres-db-0   Bound    pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f   2Gi        RWO            sc-default     12s
    postgres-db-0       Bound    pvc-d0408c47-ef32-4596-94af-826e4da89216   1Gi        RWO            sc-default     6m4s
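Instead of polling kubectl get pvc by hand, the wait can be scripted. This is only a sketch: the function name `wait_for_bound` is hypothetical, and the `--for=jsonpath` form of `kubectl wait` requires kubectl 1.23 or newer.

```shell
# Hypothetical helper: block until the given PVC reports phase Bound.
# Requires kubectl >= 1.23 for the --for=jsonpath condition.
wait_for_bound() {
  kubectl wait --for=jsonpath='{.status.phase}'=Bound "pvc/$1" --timeout=60s
}

# Usage: wait_for_bound new-postgres-db-0
```

With a StorageClass that binds volumes only on first use, this wait would time out, because the PVC is not bound before a Pod consumes it.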

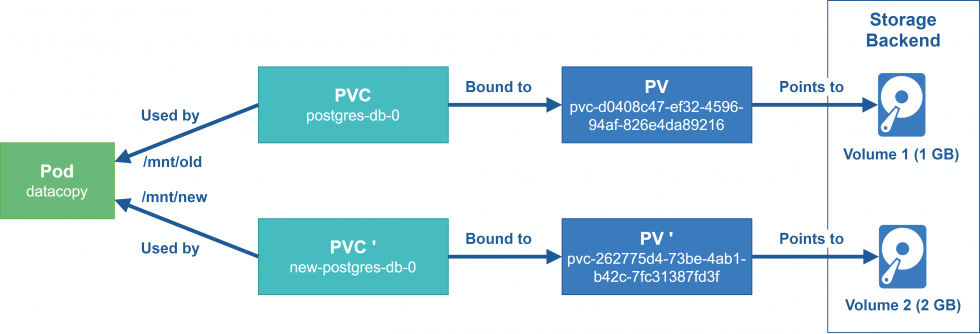

Define the YAML manifest for the temporary Pod, which is going to be used for the copy process (pod_datacopy.yaml):

    apiVersion: v1
    kind: Pod
    metadata:
      name: datacopy
      namespace: my-namespace
    spec:
      volumes:
        - name: old-pv
          persistentVolumeClaim:
            claimName: postgres-db-0
        - name: new-pv
          persistentVolumeClaim:
            claimName: new-postgres-db-0
      containers:
        - name: datacopy
          image: ubuntu
          command:
            - "sleep"
            - "36000"
          volumeMounts:
            - mountPath: "/mnt/old"
              name: old-pv
            - mountPath: "/mnt/new"
              name: new-pv

Apply the manifest to start the Pod. Once it’s up, exec into the Pod, and start a shell:

    user@host:/tmp$ kubectl apply -f pod_datacopy.yaml
    # Wait a few seconds while the Pod gets started...
    user@host:/tmp$ kubectl exec -it datacopy -- /bin/bash
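The "wait a few seconds" part can also be made explicit. A small sketch, assuming the Pod name `datacopy` from the manifest above; the function name `exec_when_ready` is made up for illustration.

```shell
# Hypothetical helper: wait until the Pod is Ready, then open a shell in it.
exec_when_ready() {
  kubectl wait --for=condition=Ready "pod/$1" --timeout=120s &&
    kubectl exec -it "$1" -- /bin/bash
}

# Usage: exec_when_ready datacopy
```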

Inside the Pod, verify that the mount point of the old PV contains the old data and that the mount point of the new PV is empty. Afterwards, invoke a “bulletproof” tar-based copy command that ensures ownership and permissions are carried over:

    root@datacopy:/# cd /mnt/
    root@datacopy:/mnt# ls -la
    total 8
    drwxr-xr-x.  1 root root   28 Jul  7 18:57 .
    drwxr-xr-x.  1 root root   28 Jul  7 18:57 ..
    drwxrwxrwx.  2   99   99 4096 Jul  7 18:53 new
    drwxrwxrwx.  3   99   99 4096 Jul  7 18:47 old
    root@datacopy:/mnt# ls -la old/
    total 8
    drwxrwxrwx.  3   99   99 4096 Jul  7 18:47 .
    drwxr-xr-x.  1 root root   28 Jul  7 18:57 ..
    drwx------. 19  999   99 4096 Jul  7 18:51 pgdata
    root@datacopy:/mnt# ls -la new/
    total 4
    drwxrwxrwx.  2   99   99 4096 Jul  7 18:53 .
    drwxr-xr-x.  1 root root   28 Jul  7 18:57 ..
    root@datacopy:/mnt# (cd /mnt/old; tar -cf - .) | (cd /mnt/new; tar -xpf -)

Once the command finishes, verify that all the data is present inside the mount point of the new PV with proper ownership and permissions. Exit the copy Pod and discard it. All the data now resides directly in both PVs.

    root@datacopy:/mnt# ls -la new/
    total 8
    drwxrwxrwx.  3   99   99 4096 Jul  7 18:47 .
    drwxr-xr-x.  1 root root   28 Jul  7 18:57 ..
    drwx------. 19  999   99 4096 Jul  7 18:51 pgdata
    root@datacopy:/mnt# exit
    exit
    user@host:/tmp$ kubectl delete pod datacopy
    pod "datacopy" deleted
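A directory listing only shows the top level. For a stricter check of the file contents, both trees can be reduced to a single digest and compared before leaving the Pod. The sketch below is not part of the original procedure. It assumes `find`, `sort` and `sha256sum` are available in the container (they are in the ubuntu image), and the function name `tree_digest` is hypothetical.

```shell
# Hypothetical helper: print one digest that summarizes the relative paths
# and contents of all regular files below a directory.
tree_digest() {
  (cd "$1" && find . -type f -exec sha256sum {} + | sort -k 2 | sha256sum | cut -d ' ' -f 1)
}

# Inside the datacopy Pod, both mount points should yield the same digest:
#   [ "$(tree_digest /mnt/old)" = "$(tree_digest /mnt/new)" ] && echo "copy verified"
```

This compares file names and contents only. Ownership and permissions still have to be checked with ls -la as shown above.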

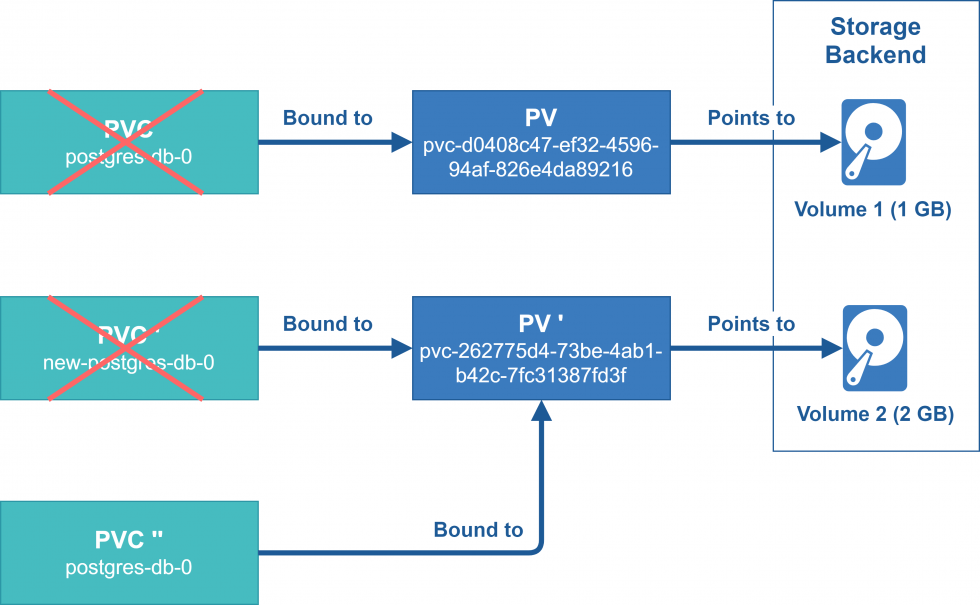

At this point, we’d like to re-use the old PVC and point it to the new PV, in order to keep changes for the rest of the application to a minimum. Alas, the binding between PVC and PV is immutable. Instead, we have to delete the current PVC and re-create it with the same name, but referencing the new PV. But careful here! Before starting to delete anything, let’s see what could happen to the old PV and its data once its bound PVC gets removed. Dynamically provisioned PVs inherit the reclaim policy of their StorageClass, which is Delete unless configured otherwise, meaning we’d also lose the PV as soon as we delete the PVC. However, we’d rather keep the PV around until we’re certain the migration went OK and we won’t have to roll back.

A simple kubectl describe pv <pv-name> command shows the current reclaim policy of a PV. Each StorageClass can define its own default reclaimPolicy for the PVs it provisions (see the StorageClass page in the Kubernetes documentation). To override the reclaim policy on the PV level, run the following command (see “Change the Reclaim Policy of a PersistentVolume” in the Kubernetes documentation):

kubectl patch pv <pv-name> -p '{"spec":{"persistentVolumeReclaimPolicy":"<reclaimpolicy-for-the-pv>"}}'

Note: This spec.persistentVolumeReclaimPolicy patch command can be run at any time as it does not have any impact on the PV’s availability or stability.

To avoid premature deletion of any data or PV, set the reclaim policy of the old and new PV to Retain:

    # Old PV
    user@host:/tmp$ kubectl describe pv pvc-d0408c47-ef32-4596-94af-826e4da89216
    Name:            pvc-d0408c47-ef32-4596-94af-826e4da89216
    ...
    StorageClass:    sc-default
    Status:          Bound
    Claim:           my-namespace/postgres-db-0
    Reclaim Policy:  Delete
    Access Modes:    RWO
    VolumeMode:      Filesystem
    Capacity:        1Gi
    ...
    user@host:/tmp$ kubectl patch pv pvc-d0408c47-ef32-4596-94af-826e4da89216 -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'
    persistentvolume/pvc-d0408c47-ef32-4596-94af-826e4da89216 patched

    # New PV
    user@host:/tmp$ kubectl describe pv pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f
    Name:            pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f
    ...
    StorageClass:    sc-default
    Status:          Bound
    Claim:           my-namespace/new-postgres-db-0
    Reclaim Policy:  Delete
    Access Modes:    RWO
    VolumeMode:      Filesystem
    Capacity:        2Gi
    ...
    user@host:/tmp$ kubectl patch pv pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'
    persistentvolume/pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f patched
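Because the next step deletes PVCs, it may be worth guarding it with a check that both PVs really report Retain. The following is a sketch under the assumptions of this example; the function name `all_retain` is hypothetical.

```shell
# Hypothetical guard: succeed only if every given PV has reclaim policy Retain.
all_retain() {
  for pv in "$@"; do
    policy="$(kubectl get pv "$pv" -o jsonpath='{.spec.persistentVolumeReclaimPolicy}')"
    if [ "$policy" != "Retain" ]; then
      echo "PV $pv has reclaim policy '$policy', refusing to continue" >&2
      return 1
    fi
  done
}

# Usage:
#   all_retain pvc-d0408c47-ef32-4596-94af-826e4da89216 \
#              pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f && echo "safe to delete the PVCs"
```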

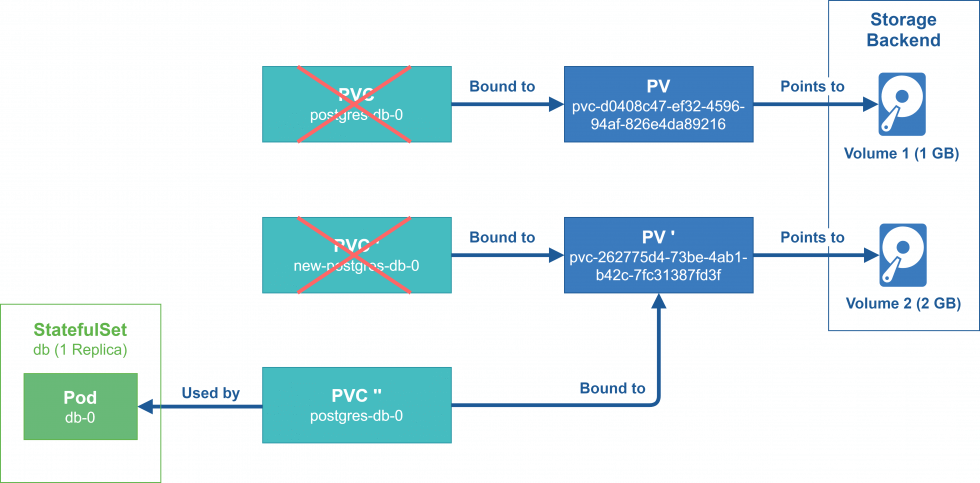

Finally, we are able to delete the old and new PVC, and create a third PVC that is essentially a merged version of both, and can be used to transparently direct our application workload to the new PV.

Delete the first two PVCs, so we can re-use the name of the original PVC, and make sure the new PV is no longer bound:

    user@host:/tmp$ kubectl get pvc
    NAME                STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    new-postgres-db-0   Bound    pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f   2Gi        RWO            sc-default     12m
    postgres-db-0       Bound    pvc-d0408c47-ef32-4596-94af-826e4da89216   1Gi        RWO            sc-default     18m
    user@host:/tmp$ kubectl delete pvc new-postgres-db-0 postgres-db-0
    persistentvolumeclaim "new-postgres-db-0" deleted
    persistentvolumeclaim "postgres-db-0" deleted

Before creating our third (and final) PVC, we must ensure that it can be bound to the new PV at all. As a closer look reveals, the new PV is currently in the status Released:

    user@host:/tmp$ kubectl describe pv pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f
    Name:            pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f
    ...
    StorageClass:    sc-default
    Status:          Released
    Claim:           my-namespace/new-postgres-db-0
    Reclaim Policy:  Retain
    Access Modes:    RWO
    VolumeMode:      Filesystem
    Capacity:        2Gi
    ...

That’s because the PV still references the deleted PVC in its spec.claimRef:

    user@host:/tmp$ kubectl get pv pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f -o yaml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      ...
      name: pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f
      ...
    spec:
      accessModes:
      - ReadWriteOnce
      capacity:
        storage: 2Gi
      claimRef:
        apiVersion: v1
        kind: PersistentVolumeClaim
        name: new-postgres-db-0
        namespace: my-namespace
        resourceVersion: "197807259"
        uid: 262775d4-73be-4ab1-b42c-7fc31387fd3f
      ...
      persistentVolumeReclaimPolicy: Retain
      storageClassName: sc-default
      volumeMode: Filesystem

To fix this, the stray reference needs to be wiped from the PV’s spec. This can be done interactively with kubectl edit pv <pv-name>, and removing the section spec.claimRef as a whole. Subsequently, kubectl describe pv <pv-name> should show the PV is in the state Available. This means it can now be referred to once again from a new PVC – our final PVC.

    # Before edit:
    user@host:/tmp$ kubectl describe pv pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f
    Name:            pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f
    ...
    StorageClass:    sc-default
    Status:          Released
    Claim:           my-namespace/new-postgres-db-0
    Reclaim Policy:  Retain
    Access Modes:    RWO
    VolumeMode:      Filesystem
    Capacity:        2Gi

    # Remove the spec.claimRef:
    user@host:/tmp$ kubectl edit pv pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f
    persistentvolume/pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f edited

    # After edit:
    user@host:/tmp$ kubectl describe pv pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f
    Name:            pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f
    ...
    StorageClass:    sc-default
    Status:          Available
    Claim:
    Reclaim Policy:  Retain
    Access Modes:    RWO
    VolumeMode:      Filesystem
    Capacity:        2Gi
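If an interactive editor is inconvenient, for instance in a script, the same change can be expressed as a JSON patch. This is an alternative sketch rather than the method used above; the function name `clear_claim_ref` is hypothetical.

```shell
# Hypothetical helper: drop spec.claimRef from a Released PV so that it
# becomes Available again, without opening an editor.
clear_claim_ref() {
  kubectl patch pv "$1" --type json \
    -p '[{"op": "remove", "path": "/spec/claimRef"}]'
}

# Usage: clear_claim_ref pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f
```

The JSON patch `remove` operation fails if the path does not exist, so running it against a PV that has no claimRef produces an error instead of silently doing nothing.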

We want the third PVC to resemble the original PVC as closely as possible, but point it to the new PV (and its properties) instead. Hence, its manifest (pvc_postgres-db-0.yaml) takes into account the following key points:

- Set the PVC name to the exact same name as your first PVC had.

- Set spec.volumeName to the name of the new PV.

- Take over the spec.resources.requests.storage, spec.accessModes and spec.storageClassName values as already configured on the second PVC.

- Apply all relevant metadata.annotations and metadata.labels from the first PVC, as these values could have an impact on the application’s PVC deployment (Helm chart, etc.).

In the end, the final PVC should look something like this:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: postgres-db-0
      namespace: my-namespace
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 2Gi
      storageClassName: sc-default
      volumeMode: Filesystem
      volumeName: pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f

Apply the final PVC. A few seconds later, the PVC and PV both should show up in status Bound:

    user@host:/tmp$ kubectl apply -f pvc_postgres-db-0.yaml
    user@host:/tmp$ kubectl get pvc
    NAME            STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    postgres-db-0   Bound    pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f   2Gi        RWO            sc-default     3s
    user@host:/tmp$ kubectl get pv pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                        STORAGECLASS   REASON   AGE
    pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f   2Gi        RWO            Retain           Bound    my-namespace/postgres-db-0   sc-default              20m
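The important detail in this output is the VOLUME column: the re-created PVC must point at the new PV, not at a freshly provisioned one. In a script, that could be asserted roughly as follows; the function name `bound_to` is made up for illustration.

```shell
# Hypothetical check: succeed only if the PVC references the expected PV.
bound_to() {
  actual="$(kubectl get pvc "$1" -o jsonpath='{.spec.volumeName}')"
  [ "$actual" = "$2" ]
}

# Usage:
#   bound_to postgres-db-0 pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f || echo "unexpected PV" >&2
```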

Last but not least, the application’s replica count can be scaled back to its original value:

kubectl scale statefulset --replicas=1 db

To validate whether the migration was successful, run the following commands and check that the application Pod does not run into any PV/PVC related issues:

    user@host:/tmp$ kubectl describe pod db-0
    Name:         db-0
    Namespace:    my-namespace
    Priority:     0
    Node:         worker02/10.100.10.11
    Start Time:   Wed, 07 Jul 2021 21:15:01 +0200
    Labels:       app=postgres-db
                  controller-revision-hash=db-6b9f597586
                  statefulset.kubernetes.io/pod-name=db-0
    Annotations:  cni.projectcalico.org/podIP: 10.42.19.79/32
                  cni.projectcalico.org/podIPs: 10.42.19.79/32
                  kubernetes.io/psp: default-psp
    Status:       Running
    IP:           10.42.19.79
    IPs:
      IP:           10.42.19.79
    Controlled By:  StatefulSet/db
    Containers:
      postgre:
        Container ID:   docker://259dd9c9c4b1328685dcff66d0fc5ce22499b99e55f3e50e25b122b49cf9d072
        Image:          postgres
        Image ID:       docker-pullable://postgres@sha256:c5943760916b897e73906d31b13236f6788376da64a2996c8944e6dbbbd418c8
        Port:           <none>
        Host Port:      <none>
        State:          Running
          Started:      Wed, 07 Jul 2021 21:15:38 +0200
        Ready:          True
        Restart Count:  0
        Environment:
          PGDATA:             /data/pgdata
          POSTGRES_PASSWORD:  password
        Mounts:
          /data from postgres (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-h2bb6 (ro)
    ...
    Volumes:
      postgres:
        Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
        ClaimName:  postgres-db-0
        ReadOnly:   false
      default-token-h2bb6:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-h2bb6
        Optional:    false
    ...
    Events:
      Type    Reason                  Age   From                     Message
      ----    ------                  ----  ----                     -------
      Normal  Scheduled               63s   default-scheduler        Successfully assigned my-namespace/db-0 to worker02
      Normal  SuccessfulAttachVolume  64s   attachdetach-controller  AttachVolume.Attach succeeded for volume "pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f"
      Normal  Pulling                 61s   kubelet                  Pulling image "postgres"
      Normal  Pulled                  28s   kubelet                  Successfully pulled image "postgres"
      Normal  Created                 27s   kubelet                  Created container postgre
      Normal  Started                 27s   kubelet                  Started container postgre
    user@host:/tmp$ kubectl logs -f db-0
    PostgreSQL Database directory appears to contain a database; Skipping initialization
    2021-07-07 19:15:38.576 UTC [1] LOG: starting PostgreSQL 13.3 (Debian 13.3-1.pgdg100+1) on x86_64-pc-linux-gnu, compiled by gcc (Debian 8.3.0-6) 8.3.0, 64-bit
    2021-07-07 19:15:38.576 UTC [1] LOG: listening on IPv4 address "0.0.0.0", port 5432
    2021-07-07 19:15:38.576 UTC [1] LOG: listening on IPv6 address "::", port 5432
    2021-07-07 19:15:38.590 UTC [1] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"
    2021-07-07 19:15:38.621 UTC [26] LOG: database system was shut down at 2021-07-07 18:51:42 UTC
    2021-07-07 19:15:38.672 UTC [1] LOG: database system is ready to accept connections
    ^C
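The same validation can be condensed into two commands that are easier to script than reading through the describe output. This is a sketch, assuming the StatefulSet `db` uses the default RollingUpdate strategy (which `kubectl rollout status` needs for StatefulSets); the function name `check_app` is hypothetical.

```shell
# Hypothetical helper: wait for the StatefulSet to become ready again and
# list Warning events (such as failed mounts) recorded for its Pod.
check_app() {
  sts="$1"   # StatefulSet name, e.g. db
  pod="$2"   # Pod name, e.g. db-0

  kubectl rollout status "statefulset/$sts" --timeout=180s || return 1

  # Shows only Warning events for the Pod; an empty list is the good case.
  kubectl get events --field-selector "involvedObject.name=$pod,type=Warning"
}

# Usage: check_app db db-0
```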

As the migration is now complete, and the temporary override is no longer required, change the PV’s reclaim policy back to the StorageClass’s default value:

    user@host:/tmp$ kubectl patch pv pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f -p '{"spec":{"persistentVolumeReclaimPolicy":"Delete"}}'
    persistentvolume/pvc-262775d4-73be-4ab1-b42c-7fc31387fd3f patched

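Once all is well, also consider cleaning up the old PV and its data. Because its reclaim policy is still Retain, deleting the PV object only removes it from Kubernetes; depending on the storage backend, the underlying volume may have to be deleted there separately: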

    user@host:/tmp$ kubectl delete pv pvc-d0408c47-ef32-4596-94af-826e4da89216
    persistentvolume "pvc-d0408c47-ef32-4596-94af-826e4da89216" deleted
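To reduce the risk of deleting the wrong PV by mistyping its name, the deletion can be wrapped in a check that the PV is no longer claimed. The sketch below is an optional safeguard, not part of the original procedure; the function name `delete_if_released` is hypothetical.

```shell
# Hypothetical safeguard: delete a PV only if it is in phase Released,
# i.e. its former PVC is gone and nothing is bound to it anymore.
delete_if_released() {
  phase="$(kubectl get pv "$1" -o jsonpath='{.status.phase}')"
  if [ "$phase" = "Released" ]; then
    kubectl delete pv "$1"
  else
    echo "PV $1 is in phase '$phase', not deleting" >&2
    return 1
  fi
}

# Usage: delete_if_released pvc-d0408c47-ef32-4596-94af-826e4da89216
```

In this walkthrough the new PV is Bound at this point, so passing its name by accident would be rejected.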